Telemetry: a big opportunity to approach scaling in the cloud – Part 2

Image by Emma Gossett – Unsplash

What’s under the hood? How do you instrument a deployed application using Azure Application Insights? What does OpenTelemetry bring to the table? How do you use telemetry to extract a component for scaling?

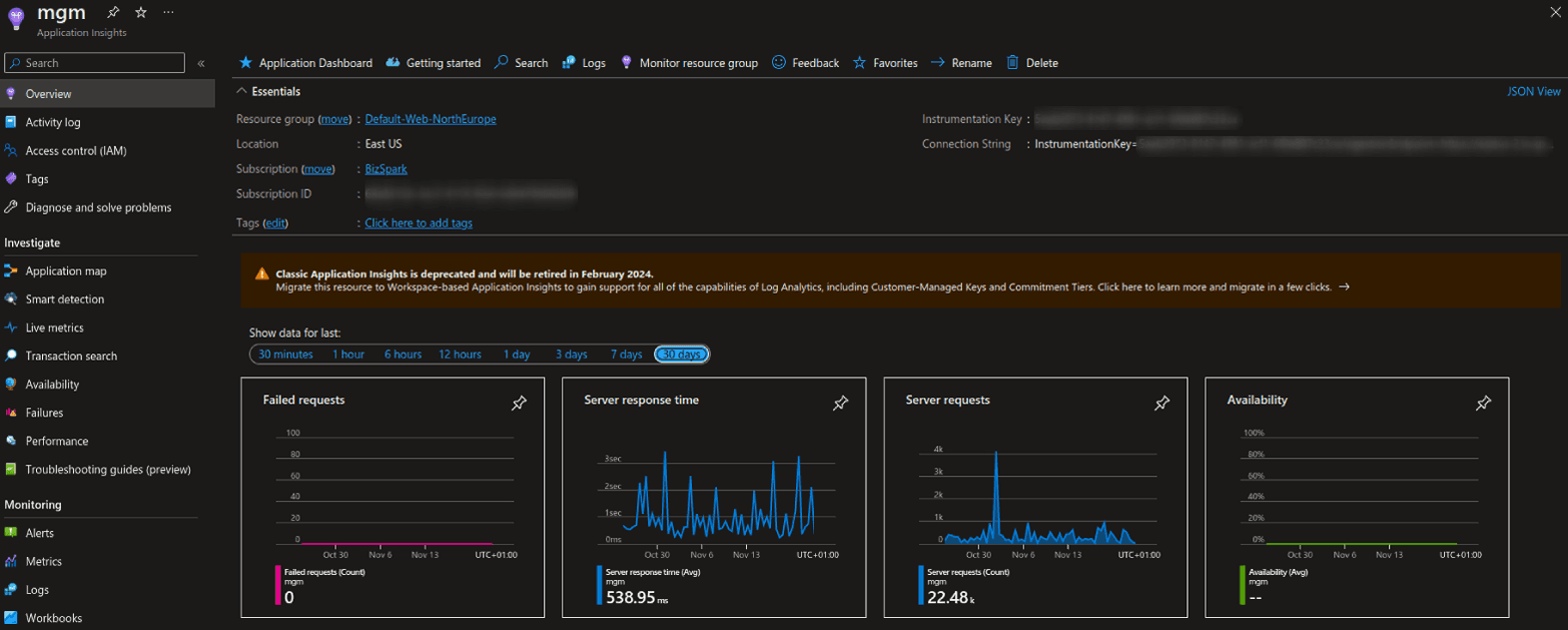

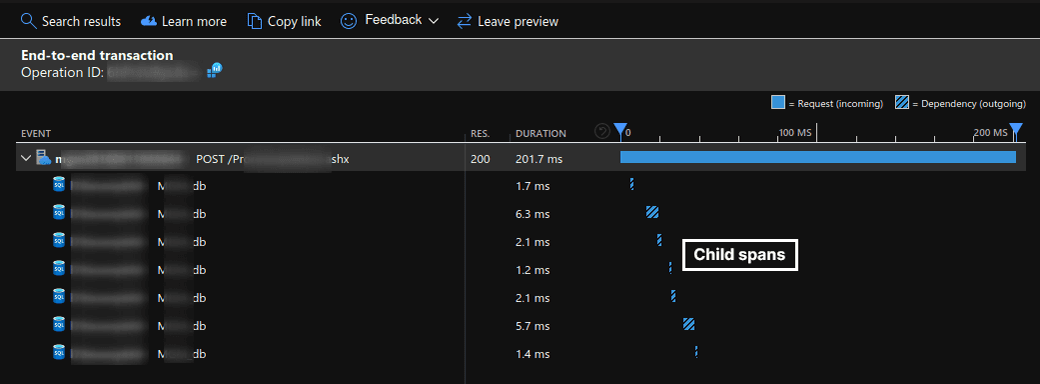

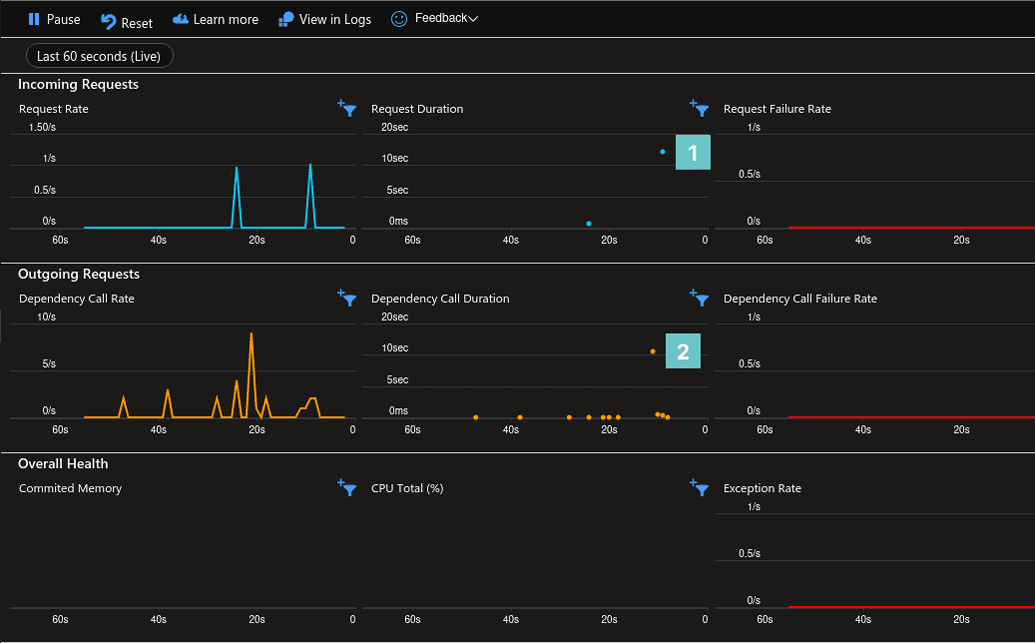

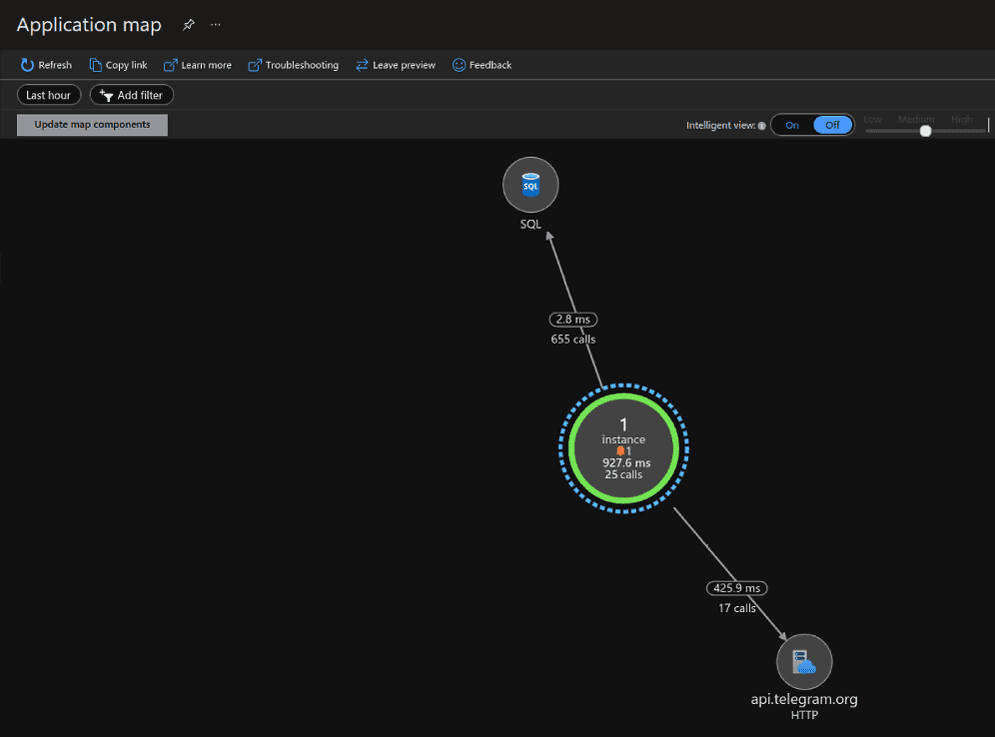

Azure Application Insights (AI)

If your application is hosted in Azure, we have good news. You can instrument your already deployed application with no code modifications at all using Application Insights.

Another popular tool worth comparing is Datadog.

OpenTelemetry

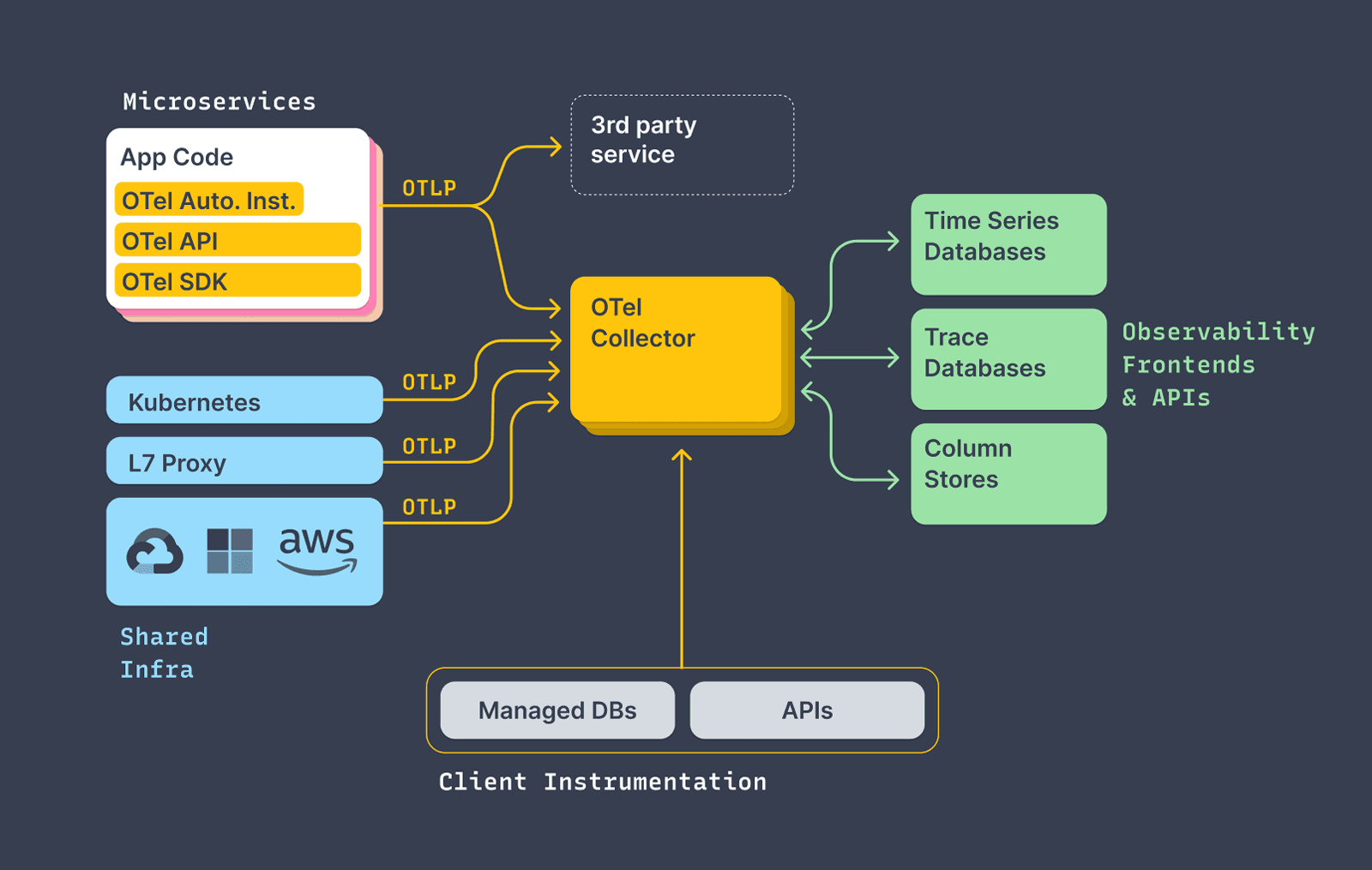

As the number of tracing tools keeps growing, so does the demand to standardize the data format and API for tracing. OpenTelemetry is being developed for that purpose: it is a set of open standards, SDKs, and tools. Check the registry to see how many products it already supports.

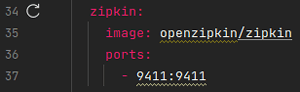

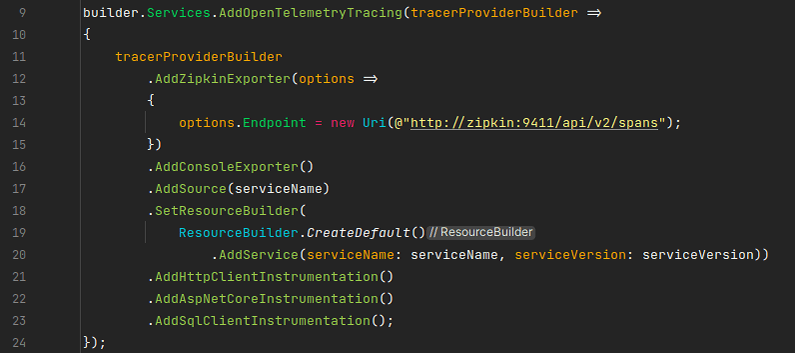

OpenTelemetry components can be containerized and reside in the Kubernetes cluster for testing and debugging purposes. For example, injecting Zipkin (a collection and visualization tool) takes only two lines in the configuration file:

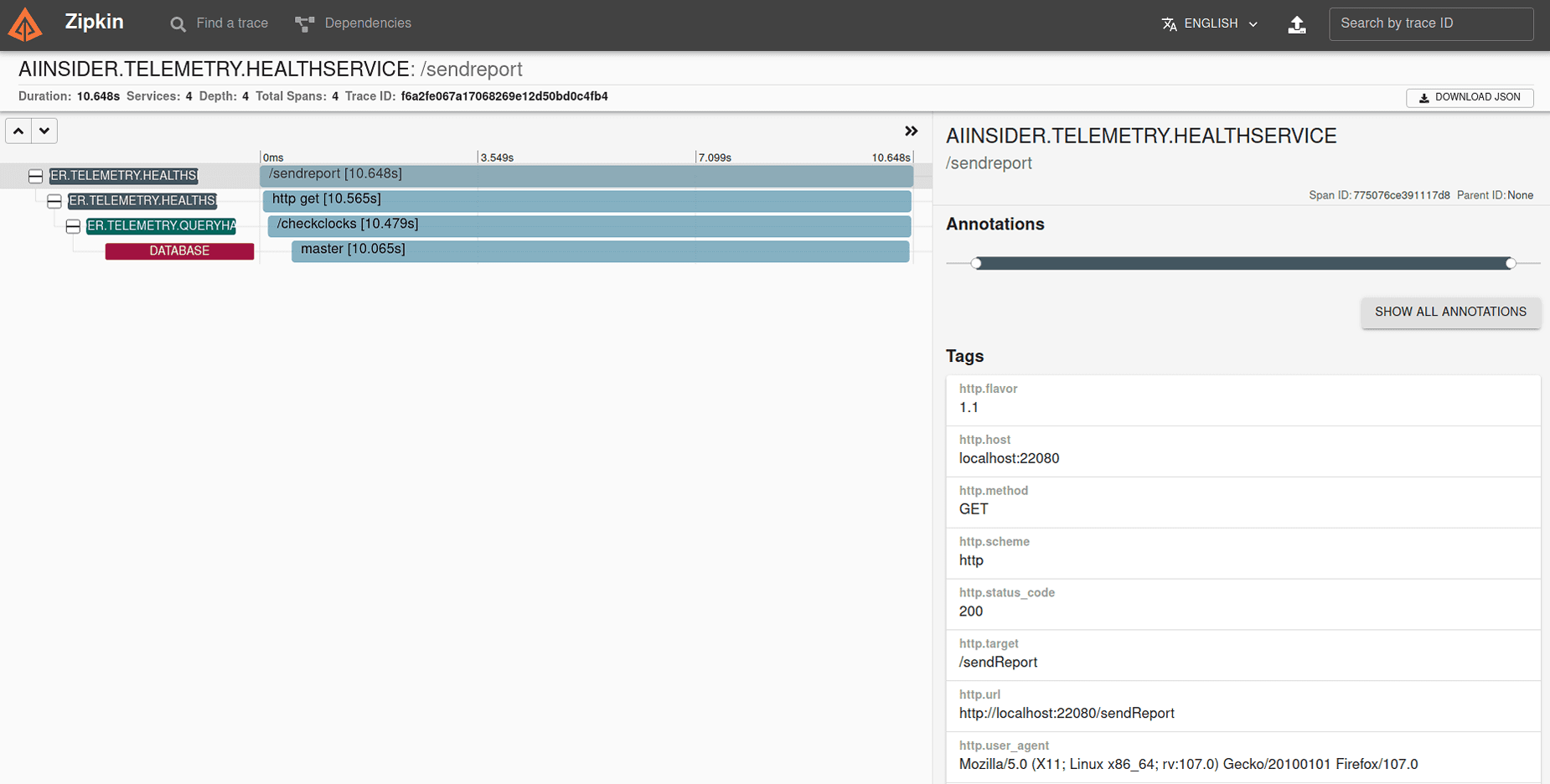

Data can be exported to a tool of our choice. Here is what Zipkin looks like:

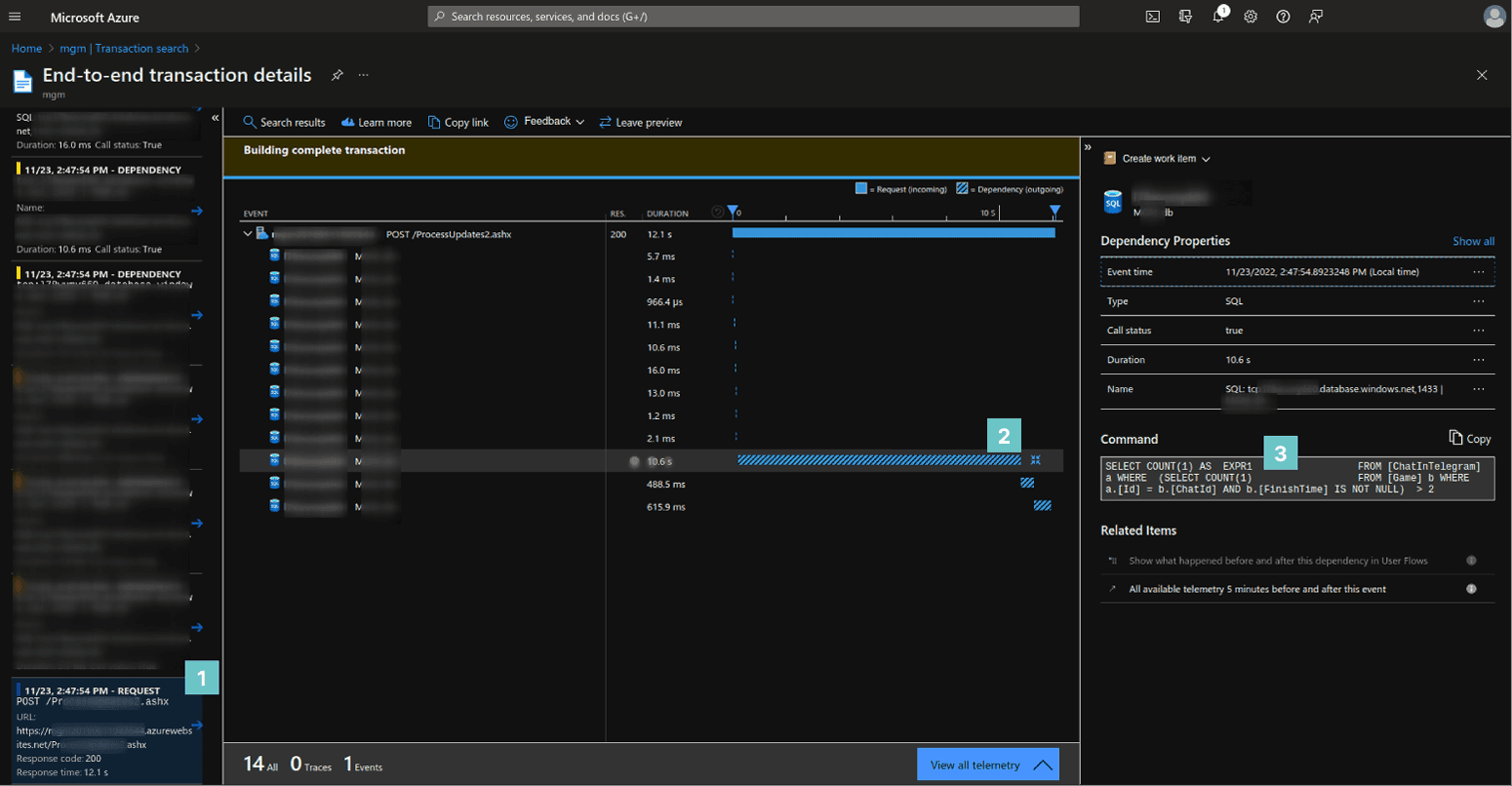
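To make the exported data less abstract, here is a minimal sketch of a single span in the Zipkin v2 JSON shape, built with the Python standard library only. The span name, service name, and tag are made up for illustration, and in a real setup the OpenTelemetry exporter produces this payload for you.

```python
import json
import secrets
import time


def make_zipkin_span(name, service_name, duration_us, tags=None):
    """Build one span as a dict in the Zipkin v2 JSON shape."""
    return {
        "traceId": secrets.token_hex(16),  # 32 lowercase hex characters
        "id": secrets.token_hex(8),        # 16 lowercase hex characters
        "name": name,
        "kind": "SERVER",
        # Zipkin uses epoch microseconds; "now" stands in for the start time.
        "timestamp": int(time.time() * 1_000_000),
        "duration": duration_us,           # also in microseconds
        "localEndpoint": {"serviceName": service_name},
        "tags": dict(tags or {}),
    }


# Hypothetical operation and service names, for illustration only.
span = make_zipkin_span(
    "generate-report", "report-service", 1_250_000, {"http.method": "POST"}
)
payload = json.dumps([span])  # Zipkin accepts a JSON array of spans
print(payload)
```

Assuming a Zipkin instance running locally on its default port, this payload could be posted to `http://localhost:9411/api/v2/spans` with the `Content-Type: application/json` header.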

Extracting the component for scaling

Next, we take the code of that slow process and move it into a separate, newly created service. This requires some additional code for serialization, validation, and application domain maintenance. Processing a single request may become somewhat slower, but we can now scale it! To control that, we introduce the Load Balancer, which starts adding instances as the number of simultaneous requests grows.
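One possible way to pick the candidate for extraction is to rank operations by their mean duration in the collected spans. The sketch below assumes the spans were already exported into plain dictionaries; the field names (`service`, `operation`, `duration_ms`) and the sample data are hypothetical, not a format used by any particular tool.

```python
from collections import defaultdict
from statistics import mean


def slowest_operations(spans, top=1):
    """Rank (service, operation) pairs by mean duration, slowest first."""
    durations = defaultdict(list)
    for span in spans:
        key = (span["service"], span["operation"])
        durations[key].append(span["duration_ms"])

    ranked = sorted(
        ((svc, op, mean(values), len(values))
         for (svc, op), values in durations.items()),
        key=lambda row: row[2],
        reverse=True,
    )
    return ranked[:top]


# Made-up sample spans, standing in for data exported from a tracing tool.
spans = [
    {"service": "web", "operation": "GET /orders", "duration_ms": 40},
    {"service": "web", "operation": "GET /orders", "duration_ms": 60},
    {"service": "web", "operation": "POST /report", "duration_ms": 1800},
    {"service": "web", "operation": "POST /report", "duration_ms": 2200},
    {"service": "web", "operation": "GET /health", "duration_ms": 5},
]

service, operation, mean_ms, calls = slowest_operations(spans)[0]
print(f"{service} {operation}: mean {mean_ms} ms over {calls} calls")
```

Ranking by mean duration is only one choice. Ranking by total time (mean multiplied by call count) would favor operations that are both slow and frequent.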

With that in place, more users can make more requests and still have them served within the same period. The only output metric that grows with the number of users is the hosting cost.
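The relationship between load, instance count, and cost can be sketched with simple arithmetic. This is an illustration under stated assumptions, not how any specific Azure scaling rule is configured: the per-instance capacity, the instance limits, and the price in cents are invented numbers.

```python
import math


def instances_needed(requests_per_second, capacity_per_instance,
                     min_instances=1, max_instances=10):
    """Instances required to serve the load, clamped to the allowed range."""
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


def hourly_cost_cents(instances, price_cents_per_instance_hour):
    """Hosting cost per hour, kept in integer cents to avoid float noise."""
    return instances * price_cents_per_instance_hour


# Assumed: one instance handles 20 requests per second and costs 10 cents/hour.
for rps in (5, 50, 120):
    count = instances_needed(rps, capacity_per_instance=20)
    print(rps, "req/s ->", count, "instance(s),",
          hourly_cost_cents(count, 10), "cents/hour")
```

The upper limit on instances is what keeps the hosting cost bounded when traffic spikes beyond what was planned for.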

Later, once the application can scale, we should keep testing that scaling. Azure has a service called Load Testing for that. If we know our users’ behavior, we can define appropriate test data to check how the application holds up under heavy load. These tests can run before a pre-release deployment or even as part of pull request validation.
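The idea behind such a test can be sketched locally with the Python standard library: send a batch of concurrent requests, record the latencies, and compare a percentile against a target. This is not the Azure Load Testing API. The handler below is a stand-in that just sleeps, and the 0.5 second target is an arbitrary assumption.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(handler, payload):
    """Return how long one handler call takes, in seconds."""
    start = time.perf_counter()
    handler(payload)
    return time.perf_counter() - start


def run_load(handler, payloads, concurrency=8):
    """Call the handler for every payload using a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda p: timed_call(handler, p), payloads))


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def fake_handler(payload):
    """Stand-in for a real request; replace with an HTTP call in practice."""
    time.sleep(0.01)


latencies = run_load(fake_handler, range(40))
p95 = percentile(latencies, 95)
print(f"p95 latency: {p95:.3f} s, within target: {p95 < 0.5}")
```

In a pull request pipeline, the final comparison would typically become a pass or fail condition for the build.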