Telemetry: a big opportunity to approach scaling in the cloud – Part 1

Image by Emma Gossett – Unsplash

What’s under the hood? What flavors of scalability should we consider in the cloud? How can we use telemetry to find the right process to extract for scaling?

Context

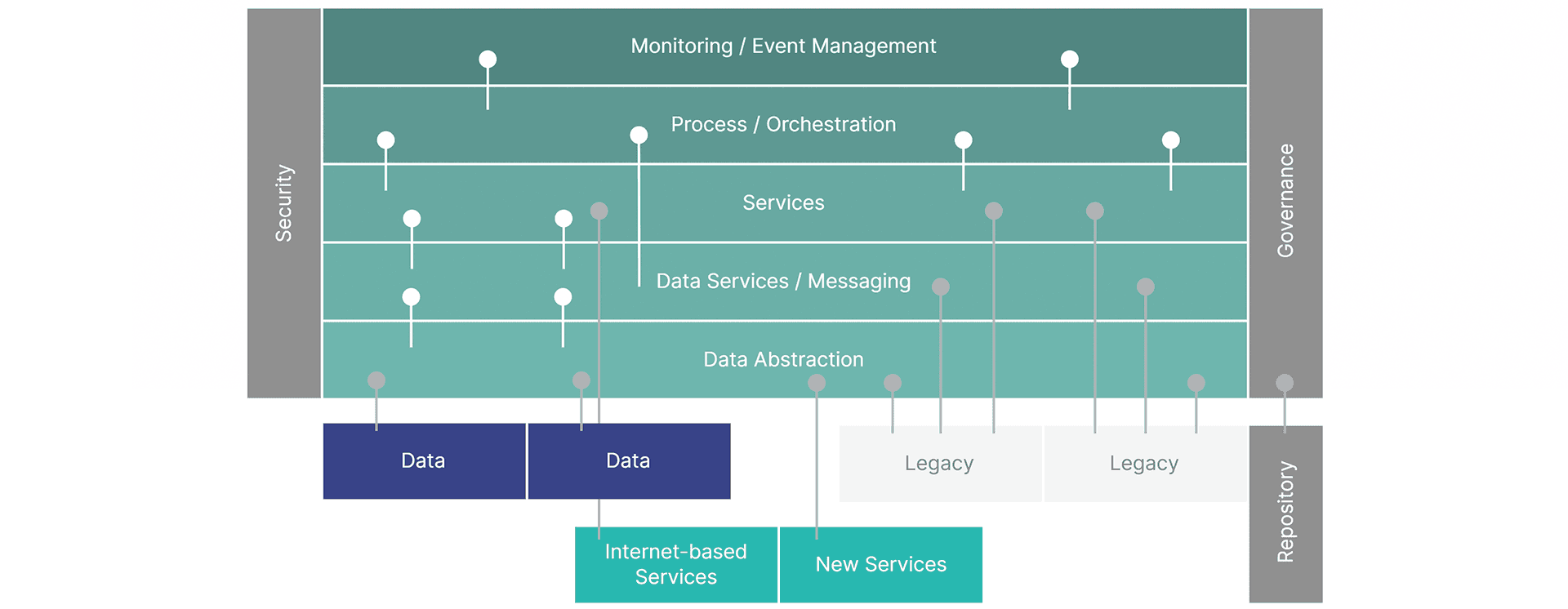

While the topic of microservices is tightly bound to scalability, the approach discussed here also applies to SOA-like systems (Fig. 1), monoliths, and various combinations of them.

For simplicity, we assume that our application is already in the cloud, for example in Azure. Some examples are .NET-based, but the idea applies to other frameworks as well.

When speaking about scalability, we usually consider two flavors of it:

- Vertical scaling is when we increase the capacity of the “hardware” resources (e.g., more CPU time, higher memory bandwidth). Since we are discussing performance, we also briefly cover optimization.
- Horizontal scaling (which also includes geo-redundancy) is when we increase the number of instances of a particular service.

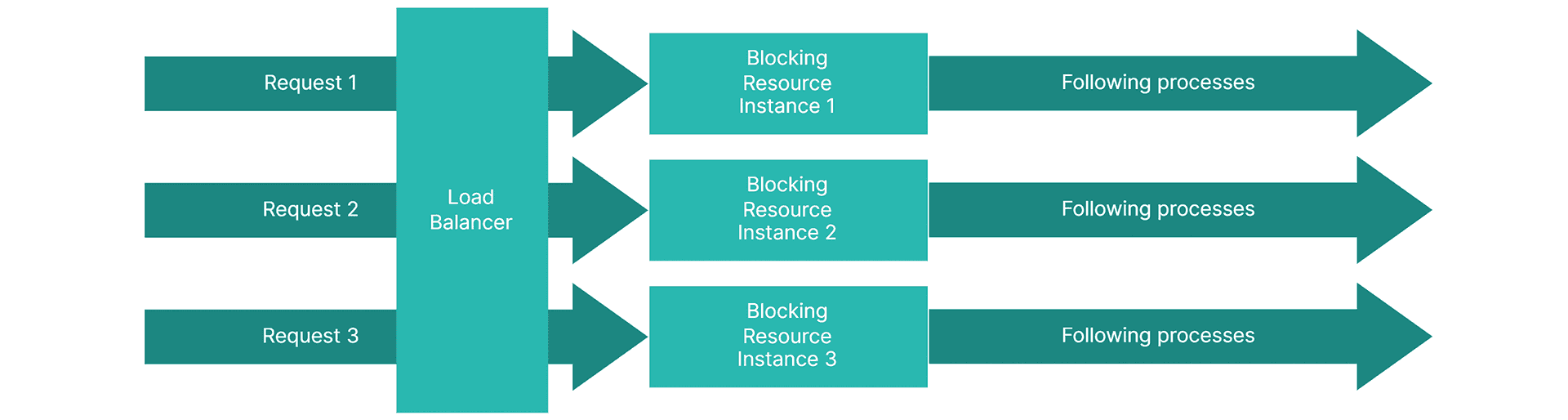

Of the two, horizontal scaling is more difficult. Why? In most cases, we cannot scale the entire application, both because some parts require synchronization and because doing so would be costly. Instead, we want to extract a particular process into a separate service and scale it independently. This approach also speeds up the service's recovery after a failure, which means less chance of an outage for our users. But how do we find the process that is working too slowly?

Finding the right process to extract for scaling

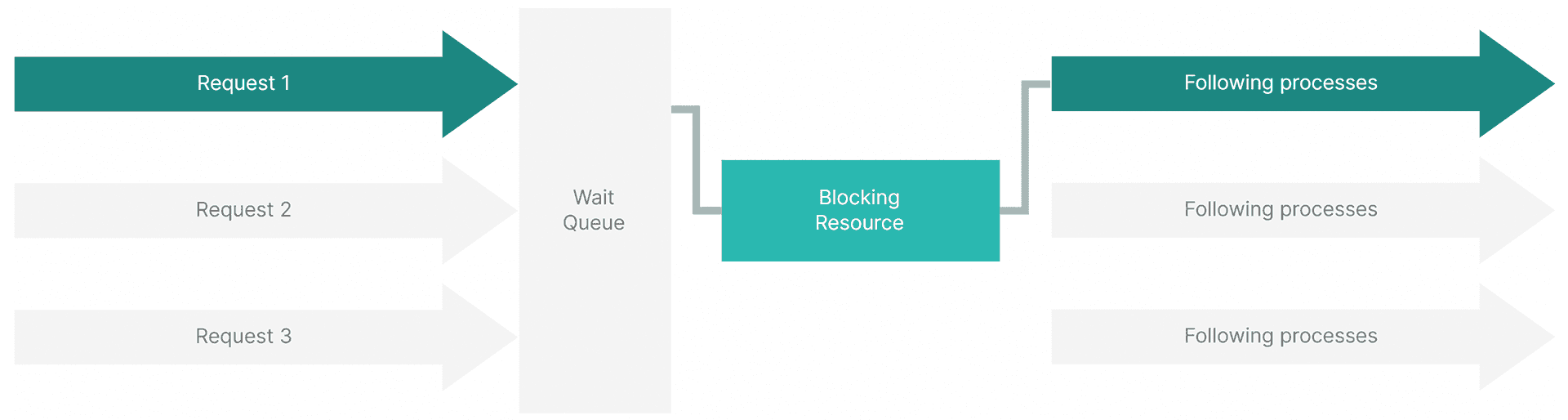

The question of scaling usually arises because particular processes in the system perform poorly. This may happen as those processes grow more complex or as more users request them at the same time. Both result in more requests entering critical-like sections (roughly, a blocking resource that only one tenant can access at a time). As a result, requests are placed in the wait queue more often, which increases the overall average response time: in other words, a bottleneck.
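
To make the queueing effect concrete, here is a toy model of our own (not part of any telemetry tool): a resource that serves one request at a time in FIFO order. The function name `simulate_critical_section` and the 10 ms service time are illustrative assumptions. The only thing that changes between runs is how closely the requests arrive, yet the average response time grows sharply.

```python
def simulate_critical_section(arrival_times, service_time):
    """Toy model: a resource that serves one request at a time, FIFO.

    Returns the average response time (time waiting in the queue + service).
    """
    free_at = 0.0          # moment the resource becomes free again
    total_response = 0.0
    for arrival in sorted(arrival_times):
        start = max(arrival, free_at)   # wait in the queue if the resource is busy
        free_at = start + service_time
        total_response += free_at - arrival
    return total_response / len(arrival_times)


# Ten requests, each holding the resource for 10 ms.
for label, arrivals in [
    ("every 20 ms", range(0, 200, 20)),
    ("every 5 ms", range(0, 50, 5)),
    ("all at once", [0] * 10),
]:
    avg = simulate_critical_section(arrivals, 10)
    print(f"{label:>12}: average response {avg:.1f} ms")
# Prints 10.0, 32.5 and 55.0 ms respectively.
```

The work per request never changes; only the waiting does. That is why such a bottleneck shows up in telemetry as growing response times rather than as slower code.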

When tenants are spread geographically, we may want to deploy one or several services per database so that they run geographically closer to the users (please see Database sharding).
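
A minimal sketch of this routing idea, assuming each tenant is pinned to one home region and each region has its own database with one or more service instances in front of it. All region names, database names, URLs, tenant IDs and the `route` function are hypothetical; a real system would read them from configuration or a tenant catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionalShard:
    region: str
    database: str                 # hypothetical database name for this shard
    service_urls: tuple[str, ...] # one or several service instances near that database


# Hypothetical deployment: one shard per region.
SHARDS = {
    "westeurope": RegionalShard(
        "westeurope", "orders-weu",
        ("https://orders-weu-1.example.com", "https://orders-weu-2.example.com"),
    ),
    "eastus": RegionalShard(
        "eastus", "orders-eus",
        ("https://orders-eus-1.example.com",),
    ),
}

# Hypothetical tenant directory: each tenant lives in its home region.
TENANT_HOME_REGION = {"tenant-a": "westeurope", "tenant-b": "eastus"}


def route(tenant_id: str, request_no: int) -> str:
    """Return the service URL that should handle this tenant's request."""
    shard = SHARDS[TENANT_HOME_REGION[tenant_id]]
    # Spread requests round-robin across the shard's service instances.
    return shard.service_urls[request_no % len(shard.service_urls)]


print(route("tenant-a", 0))  # https://orders-weu-1.example.com
print(route("tenant-a", 1))  # https://orders-weu-2.example.com
print(route("tenant-b", 7))  # https://orders-eus-1.example.com
```

Each region now has its own set of queues, so a bottleneck in one shard may not be visible in another, which is part of why tracing becomes harder.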

In these cases, bottlenecks and queues can combine in various ways and may not be simple to trace. Let’s see how telemetry can help with that.

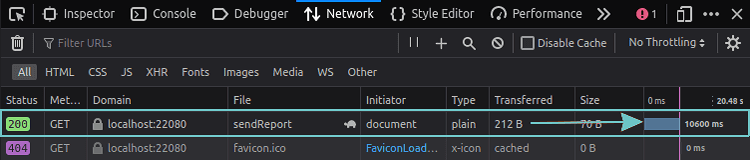

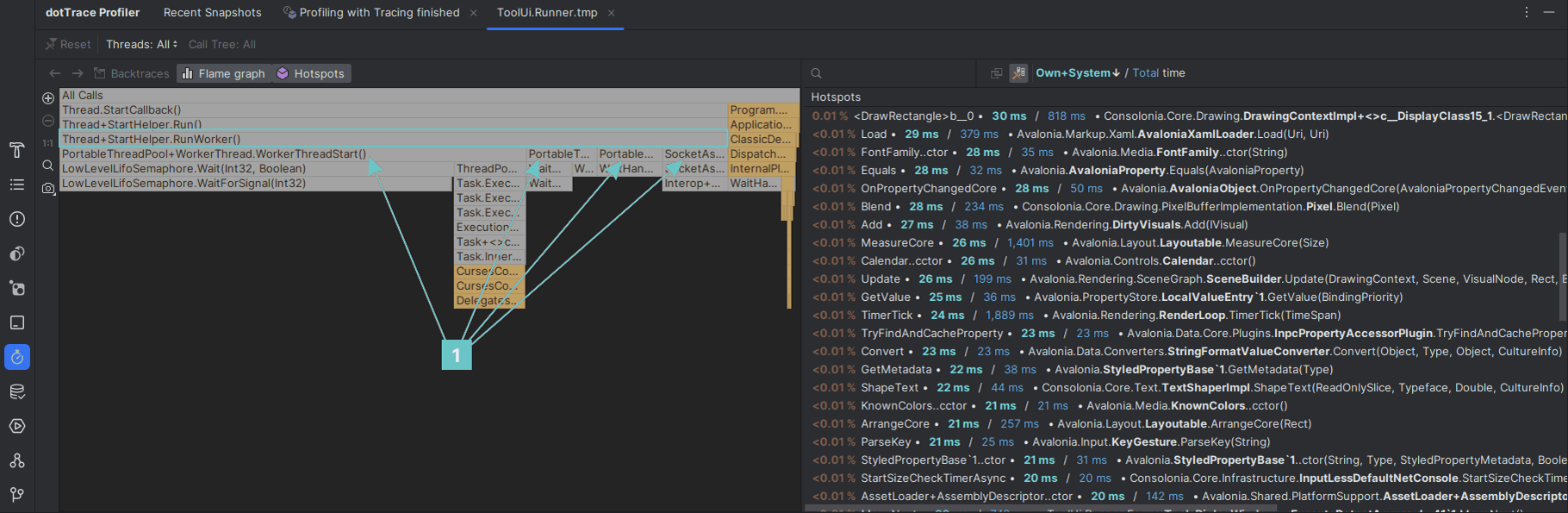

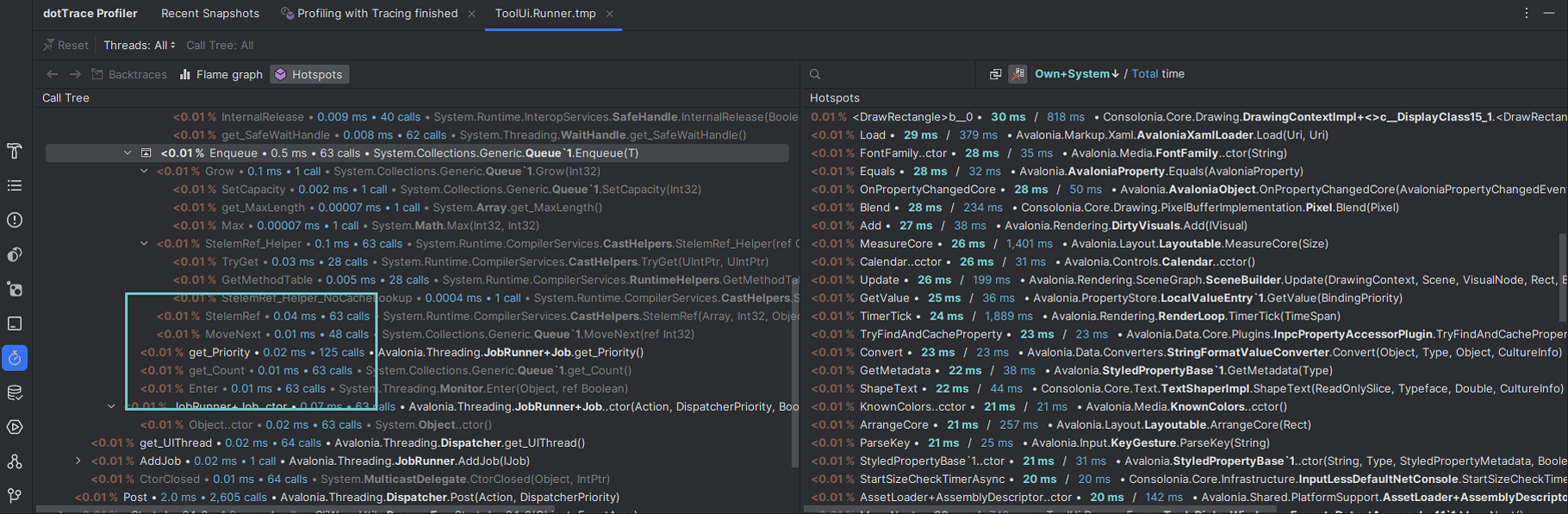

There, we can find the timings we are not satisfied with and then, for more detail, attach a profiler, which measures the time spent in each function of our application.

The profiler visualizes the slowest spans along with the child spans they consist of, so we can see exactly how much time was spent in each function.
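
Profilers and tracing SDKs record this for us. To illustrate what a span and its child spans are, here is a toy tracer of our own (not the API of any real profiler or of Azure tooling). It records nested spans and derives a span's self time, meaning its duration minus the time spent in its children. The `Tracer` class and the span names are hypothetical, and `time.sleep` stands in for real work.

```python
import time
from contextlib import contextmanager


class Tracer:
    """Toy tracer: records nested spans as (name, parent_name, seconds)."""

    def __init__(self):
        self.stack = []   # names of currently open spans
        self.spans = []   # finished spans, in the order they finished

    @contextmanager
    def span(self, name):
        parent = self.stack[-1] if self.stack else None
        self.stack.append(name)
        start = time.perf_counter()
        try:
            yield
        finally:
            duration = time.perf_counter() - start
            self.stack.pop()
            self.spans.append((name, parent, duration))

    def self_time(self, name):
        """Time spent in the span itself, excluding its direct children."""
        total = sum(d for n, _, d in self.spans if n == name)
        children = sum(d for _, p, d in self.spans if p == name)
        return total - children


tracer = Tracer()
with tracer.span("handle_request"):
    with tracer.span("load_from_db"):
        time.sleep(0.05)      # stands in for a slow query
    with tracer.span("render"):
        time.sleep(0.01)

for name, parent, duration in tracer.spans:
    print(f"{name:<15} parent={parent!s:<15} {duration * 1000:6.1f} ms")
print(f"handle_request self time: {tracer.self_time('handle_request') * 1000:.1f} ms")
```

A real profiler does the same bookkeeping automatically for every function call, which is what lets it point at the child span that dominates a slow request.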

Here, we can also look for optimizations in the code itself. Some problems are easily fixed by correcting programming mistakes. Others can be eliminated by introducing caching or indexes, or by converting a function to asynchronous code or, conversely, to synchronous code where necessary.
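
As an illustration of the caching option, here is a sketch using Python's standard `functools.lru_cache`. The article's own examples are .NET-based; Python is used here only to show the principle. The `exchange_rate` function and its data are hypothetical stand-ins for a slow remote call or database query.

```python
from functools import lru_cache

calls = 0  # how many times the "slow" body actually ran


@lru_cache(maxsize=1024)
def exchange_rate(currency: str) -> float:
    """Hypothetical stand-in for a slow lookup (remote service or DB query)."""
    global calls
    calls += 1
    rates = {"EUR": 1.0, "USD": 1.08}  # hypothetical data
    return rates[currency]


for _ in range(1000):
    exchange_rate("USD")

print(calls)                       # 1
print(exchange_rate.cache_info())  # hits=999, misses=1
```

The trade-off is staleness: a cache like this is only safe when the underlying data rarely changes or when serving slightly outdated values is acceptable.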

At this point, it’s also possible to spot algorithms whose complexity is too high. A special profiling mode shows us both the time taken and the number of calls.
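
Why call counts matter: a function that is fast on its own can still dominate when it is called quadratically often. The sketch below is a simplified stand-in for a profiler's call counter, written as a Python decorator. `count_calls`, `same_order` and `has_duplicates` are hypothetical names for illustration only.

```python
import functools


def count_calls(func):
    """Decorator that counts how many times `func` is invoked."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def same_order(a, b):
    # Hypothetical cheap comparison inside a hot loop.
    return a == b


def has_duplicates(orders):
    # Compares every pair: n * (n - 1) / 2 calls for n distinct orders.
    for i in range(len(orders)):
        for j in range(i + 1, len(orders)):
            if same_order(orders[i], orders[j]):
                return True
    return False


calls_by_size = {}
for n in (100, 200, 400):
    same_order.calls = 0
    has_duplicates(list(range(n)))
    calls_by_size[n] = same_order.calls

print(calls_by_size)  # {100: 4950, 200: 19900, 400: 79800}
```

Doubling the input roughly quadruples the call count, which is the signature of O(n^2) work. Here the fix is algorithmic (for example, a set-based duplicate check) rather than more hardware.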

Additionally, and less often discussed, we can also profile memory consumption. Memory profiling not only helps us understand how to increase speed but can also be an argument for scaling in its own right. For .NET, please check JetBrains dotMemory or similar tools.
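
The same idea in a language-neutral form: Python ships a built-in memory tracer, `tracemalloc`, which reports the current and peak traced allocation and which source lines allocated the most. The `build_report` function is a hypothetical example of code that materializes everything in memory at once.

```python
import tracemalloc


def build_report(rows: int) -> list[str]:
    # Hypothetical report builder that keeps every row in memory at once.
    return [f"row {i}: " + "x" * 100 for i in range(rows)]


tracemalloc.start()
report = build_report(20_000)
current, peak = tracemalloc.get_traced_memory()  # bytes, while tracing is active
snapshot = tracemalloc.take_snapshot()
tracemalloc.stop()

print(f"current={current / 1024:.0f} KiB, peak={peak / 1024:.0f} KiB")
for stat in snapshot.statistics("lineno")[:3]:
    print(stat)  # top allocating source lines
```

If the peak grows with the number of rows, streaming the report instead of building it in memory may help. If memory per request is inherently high, that is exactly the kind of evidence that can justify scaling.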

These profiler features give us a bigger picture of performance. However, that picture can be very different from what happens in production: users may invoke some processes more or less frequently than we tested. Luckily, we have a number of tools to see traces in production, and some even allow us to observe the system in real time.

What tools? Let’s start with Azure. Actually, let this knowledge sink in for a bit and then come back for Part 2 of this article.